Safety vs Censorship: Watchers’ Community Moderation

Why is moderation so crucial for platforms? How does it influence customer behaviour and loyalty? What do recent studies say about the connection between safety in digital spaces and business growth? And how do we handle it?

One of the first questions we hear from companies interested in Watchers’ embeddable social platform is how to keep communications in publicly available community chats safe.

They put it this way: If we let users interact openly on our site or in our app, how can we protect ourselves from:

- toxic attacks between users and toward the brand,

- spam/scams,

- attempts by competitors to lure the audience away with their offers?

Why is this truly critical?

- Online aggression drives customers away. According to Pew Research Center, 41% of US adults have personally experienced online harassment; people who encounter it either leave or reduce their activity, which hurts engagement metrics.

- Spam and scams mean direct losses. More than half of all global email traffic is spam, and the annual cost to businesses of processing spam and dealing with its consequences is estimated at $20.5 billion. The damage in social environments is harder to quantify, but it is likely substantial as well.

- Competitive “invasion” is more real than it seems. Brandjacking—when competitors deliberately enter someone else’s community to advertise their services—remains legal and, according to marketing analysts, in 2025, “continues to be a significant threat to digital communities,” even though exact incident statistics are rarely disclosed.

- Users expect protection from the brand. In the European segment of the annual Microsoft Global Online Safety Survey 2025, 60% of respondents admitted they cannot always distinguish safe content from harmful content and rely on platforms' “safety-by-design” solutions.

We started building moderation alongside the first version of our community chats, because the experience of other platforms showed that without clear rules and an effective yet reasonable filtering system, healthy dialogue collapses in a matter of weeks. Spam and scams harm brand reputation and finances, while toxicity drives away the regular users who come to exchange ideas and support.

Our principle: effective, healthy moderation instead of blind censorship

We are still convinced that any viewpoint should have a place as long as it stays within respectful communication boundaries. Yet a platform must:

- Reduce toxicity—otherwise reasonable participants will leave.

- Protect the brand and business metrics from spam and competitors.

- Comply with regulators. For example, the UK Online Safety Act 2023 already obliges services to “take proportionate measures” to prevent priority illegal content and remove it quickly.

Therefore, at Watchers, we speak not about “abstract blocking” but about effective, transparent and ethical moderation, where:

- Technical layers (filters, AI moderation, personal-data masking) intercept up to 95% of unwanted content.

- User self-control and appeals ensure the legitimacy of decisions.

- The brand can view analytics and details in the admin panel and can finely tune filter strictness for different audiences.

This way, we help companies not merely “silence” negativity but maintain a lively and safe community in which customers stay longer, and business metrics grow.

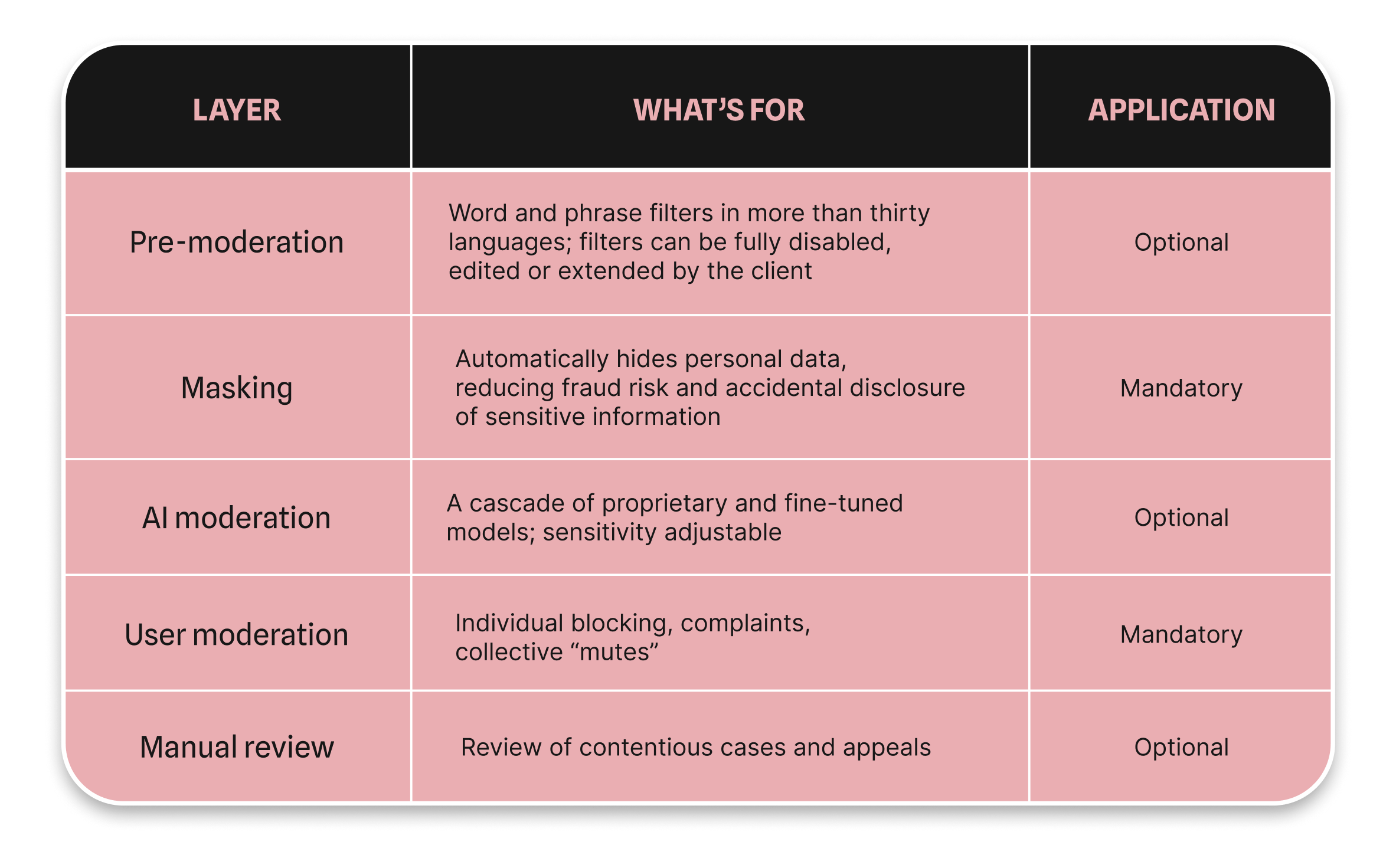

The 5 layers of Watchers' moderation system

When creating public community chats on their site or in an app, a platform can enable, disable and tune each protection layer so that it matches brand values and audience expectations.

Layer 1. Pre-moderation

At this level, the system checks a message before it is sent. Ready-made word and phrase lists exist in 30 languages; they can be turned off completely, edited, or expanded with your own entries. We recommend keeping the list brief and limiting it to unequivocally unacceptable expressions. If a message violates the list, the user receives a warning before publication.
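
To make the idea concrete, here is a minimal sketch of a list-based pre-send check. It is not Watchers' implementation: the `BLOCKLIST` contents, the `pre_moderate` function and its return shape are all hypothetical and only illustrate how a short, editable per-language list could be matched before a message is published.

```python
import re

# Hypothetical per-language blocklist; a real one would be edited by the brand.
BLOCKLIST = {"en": ["idiot", "buy followers"]}


def build_pattern(entries):
    """Compile entries into one case-insensitive, whole-word pattern."""
    escaped = (re.escape(entry) for entry in entries)
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


def pre_moderate(message, language="en", blocklist=BLOCKLIST):
    """Check a message before sending; return whether it may be published."""
    entries = blocklist.get(language, [])
    if not entries:
        # Layer disabled, or no list configured for this language.
        return {"allowed": True, "matches": []}
    pattern = build_pattern(entries)
    matches = [m.group(0).lower() for m in pattern.finditer(message)]
    return {"allowed": not matches, "matches": matches}
```

Whole-word matching is a deliberate choice in this sketch: a message containing "idiotic" is not flagged by the entry "idiot", which keeps a brief list from producing surprising warnings.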

Layer 2. Masking

Personal data (phone numbers, email addresses, and bank card details) is automatically hidden if a user accidentally enters it in a public chat. This protects privacy and prevents fraud risks: we do not hinder interaction, but we create a safe distance between the personal and the public.
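
A rough illustration of masking, assuming simple regular expressions are good enough for a first pass. The patterns, the placeholder labels and the `mask_personal_data` function are assumptions made for this example, not the product's actual rules; production systems usually add checks such as card-number checksums and locale-aware phone parsing.

```python
import re

# Illustrative patterns only; they will miss some formats and may
# over-match others (for example long numeric IDs).
CARD = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
PHONE = re.compile(r"(?<!\w)\+?\d[\d\s().-]{7,}\d(?!\w)")


def mask_personal_data(text):
    """Replace card numbers, emails and phone numbers with placeholders."""
    # Cards are masked first so a long digit run is not treated as a phone.
    text = CARD.sub("[card hidden]", text)
    text = EMAIL.sub("[email hidden]", text)
    text = PHONE.sub("[phone hidden]", text)
    return text
```

With these patterns, a message such as "Call me at +1 202-555-0173 or mail bob@example.com" would be shown to other participants as "Call me at [phone hidden] or mail [email hidden]".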

Layer 3. AI moderation

A cascade of proprietary and fine-tuned models analyses text and images for insults, sexual content, violence, spam, scams, and other violations. Content is published to other users only after this review, which takes a few milliseconds. A company can adjust the sensitivity level or turn this layer off entirely; borderline cases can be forwarded for manual review.
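
The sensitivity setting can be pictured as a pair of thresholds applied to the scores a model returns. The sketch below assumes a classifier that yields a score between 0 and 1 per category; the preset names, the threshold values and the `decide` function are hypothetical and chosen only to show how "publish", "manual review" and "block" outcomes might be separated.

```python
# Hypothetical presets: (manual-review threshold, block threshold).
# None means the AI layer is switched off.
SENSITIVITY = {
    "off": None,
    "relaxed": (0.80, 0.95),
    "strict": (0.50, 0.80),
}


def decide(scores, sensitivity="relaxed"):
    """Map per-category scores (0..1) to publish / manual_review / block."""
    thresholds = SENSITIVITY[sensitivity]
    if thresholds is None:
        return "publish"
    review_at, block_at = thresholds
    worst = max(scores.values(), default=0.0)  # most severe category wins
    if worst >= block_at:
        return "block"
    if worst >= review_at:
        return "manual_review"  # borderline case goes to a human
    return "publish"
```

Under these assumed presets, a message scored `{"insult": 0.9, "spam": 0.1}` would go to manual review on the relaxed setting and be blocked on the strict one.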

Layer 4. User moderation

Each participant can hide messages from a particular author for themselves if views clash while remaining in the community. This mechanism supports self-regulation, allowing people to stay in the chat without being exposed to content they personally find unwanted. Collective reports also work—if several people complain about one user, that user is automatically put “on pause.”
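
The collective-report rule can be sketched as a counter of distinct reporters per user. The threshold of three reporters, the `ReportTracker` class and its method names are assumptions for illustration; the text above does not state how many complaints trigger the pause or how long it lasts.

```python
from collections import defaultdict


class ReportTracker:
    """Pause a user once enough distinct participants have reported them."""

    def __init__(self, pause_threshold=3):  # threshold is an assumed value
        self.pause_threshold = pause_threshold
        self._reporters = defaultdict(set)  # target id -> set of reporter ids
        self.paused = set()

    def report(self, reporter_id, target_id):
        """Record a report; return True if the target is now paused."""
        if reporter_id == target_id:
            return target_id in self.paused  # ignore self-reports
        # A set means repeated reports from one person count only once.
        self._reporters[target_id].add(reporter_id)
        if len(self._reporters[target_id]) >= self.pause_threshold:
            self.paused.add(target_id)
        return target_id in self.paused
```

Counting distinct reporters rather than raw reports is one way to keep a single annoyed participant from pausing someone on their own.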

Layer 5. Manual moderation

We recommend reserving this layer for appeals and rare contentious cases. But if a brand wants to review messages manually, the moderation panel is at its disposal.

Community-chat economics

Community chats on the platform can deliver double-digit growth in LTV and transaction metrics—provided the environment is healthy. Internal analysis by Gainsight (2023) found that customers highly engaged in brand communities renew contracts more often and show greater upsell than users not participating in a community.

However, if basic customer care is absent, chats only expose problems. Strong moderation will not save a toxic core.

So, before opening community chats on your platform, ask yourself: do we respond to customers promptly, are our processes transparent, is there a meaningful core of loyal users who can spark the social effect? If the answer is “yes,” community chats will create exactly the space where ideas emerge, users help each other and recommend your services. Then the embedded social space truly becomes your loudest “wow effect”—a force that makes the brand unbeatable.

***

Please fill out the Get in Touch form if you'd like to integrate community chats with the high-level moderation system into your website or app. We'll help you find the best solution.

Sources

Guidance to the regulator about fees relating to the Online Safety Act 2023 | GOV.UK

‘Real or not?’ The online threat kids are facing at school | The Daily Telegraph

The State of Online Harassment | Pew Research Center

What is “Brandjacking”? | Boomcycle Digital Marketing

What’s on the Other Side of Your Inbox – 20 SPAM Statistics 2025 | DataProt

Emergence of online communities: Empirical evidence and theory | PubMed

Community Engagement Drives Post-Sales Results | Gainsight Community
